Real-time deletes

Real-time deletes allow you to delete existing rows in a table, which are immediately excluded when querying.Use cases

- Delete rows in a table and immediately exclude them from queries

- Delete entities (users, accounts, etc.) or dimensions

How it works

Real-time deletes in Propel are supported by ClickHouse’s ReplacingMergeTree table engine. Deletes do not happen transactionally. Instead, they happen asynchronously, where Propel uses a combination of filter-on-read and background jobs to perform the deletes. When a row is deleted, a new record is written with an internal_propel_is_deleted column set to true. Propel deletes these records in the background, asynchronously. To ensure that deleted data is never returned in queries, Propel automatically performs a filter-on-read when querying ReplacingMergeTree tables. This ensures that no deleted record is returned, even if the background delete operation has not yet occurred.

However, it’s important to note that there are two implications:

- The row count in ReplacingMergeTree tables may be larger than the logical number of rows. This is because there may be duplicate rows in the table that haven’t been deleted yet. These rows will be de-duplicated at query time.

- Filtering deleted records at query time has performance implications on read operations. This can be compensated using a larger Propeller.

Create a Data Pool to handle real-time deletes

In this section, we will guide you through the process of creating a Data Pool that can handle real-time deletes. We will provide step-by-step instructions on how to create it via the Console, API, and Terraform.- Console

- API

- Terraform

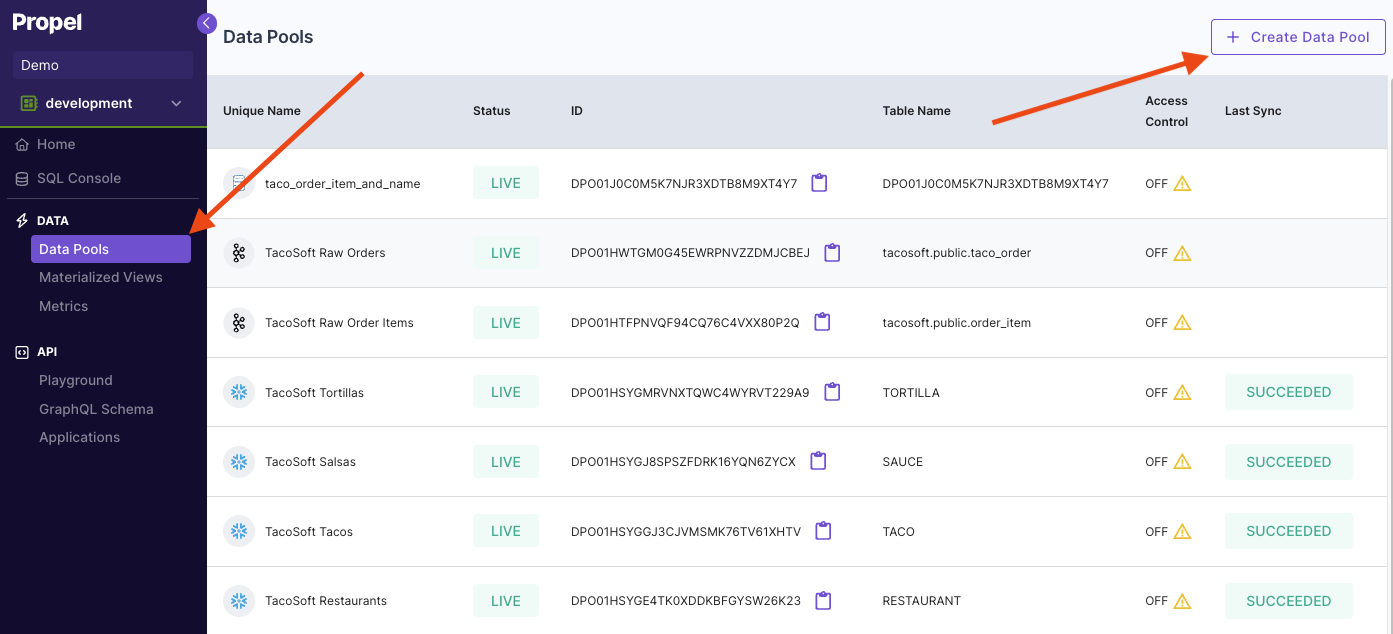

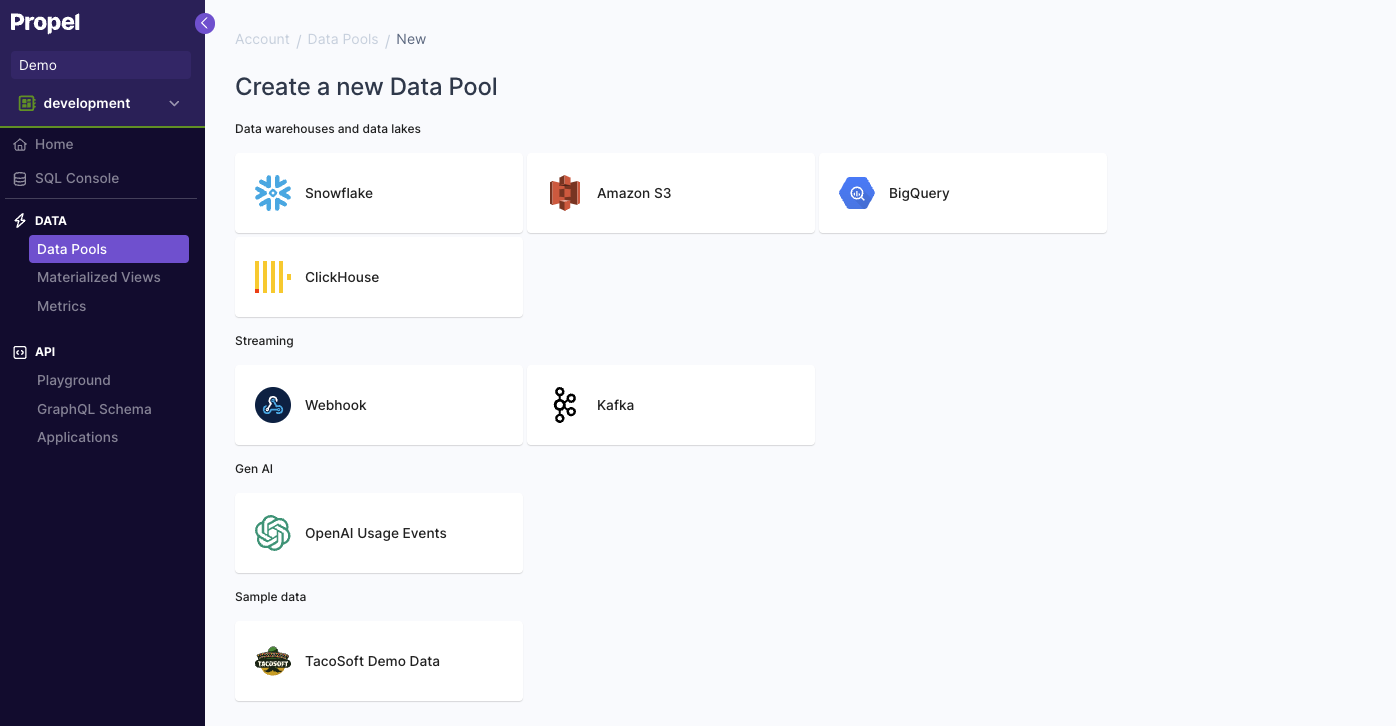

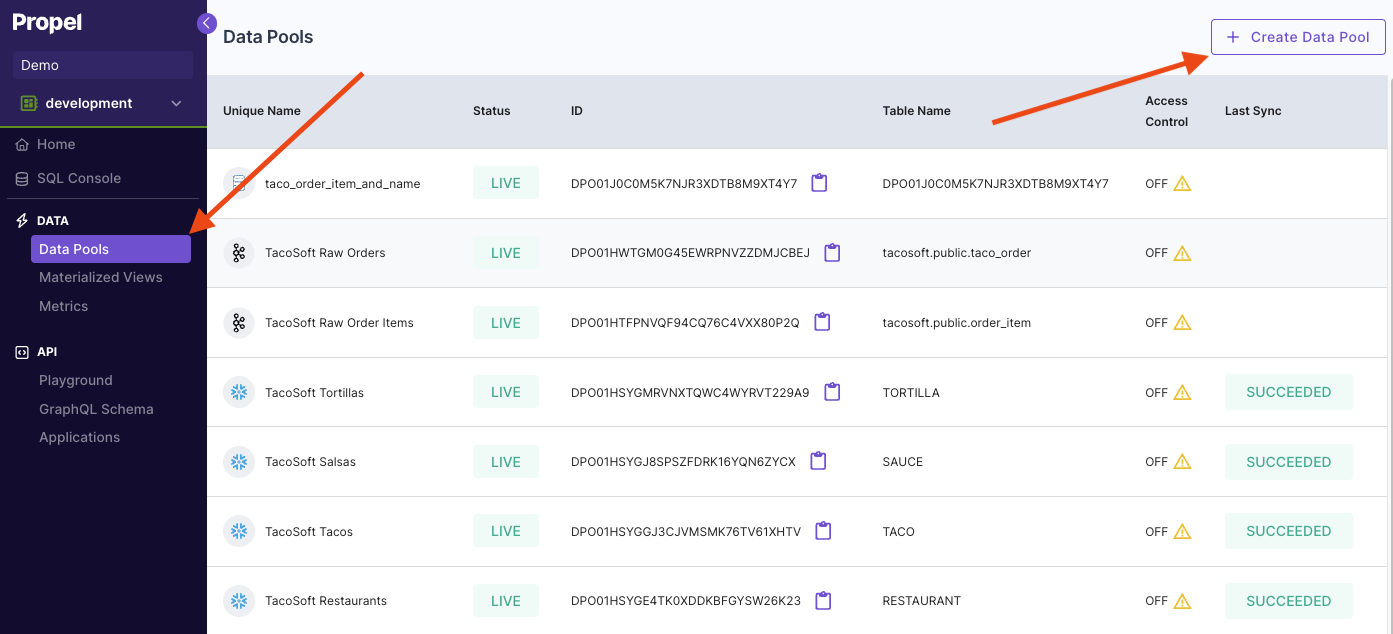

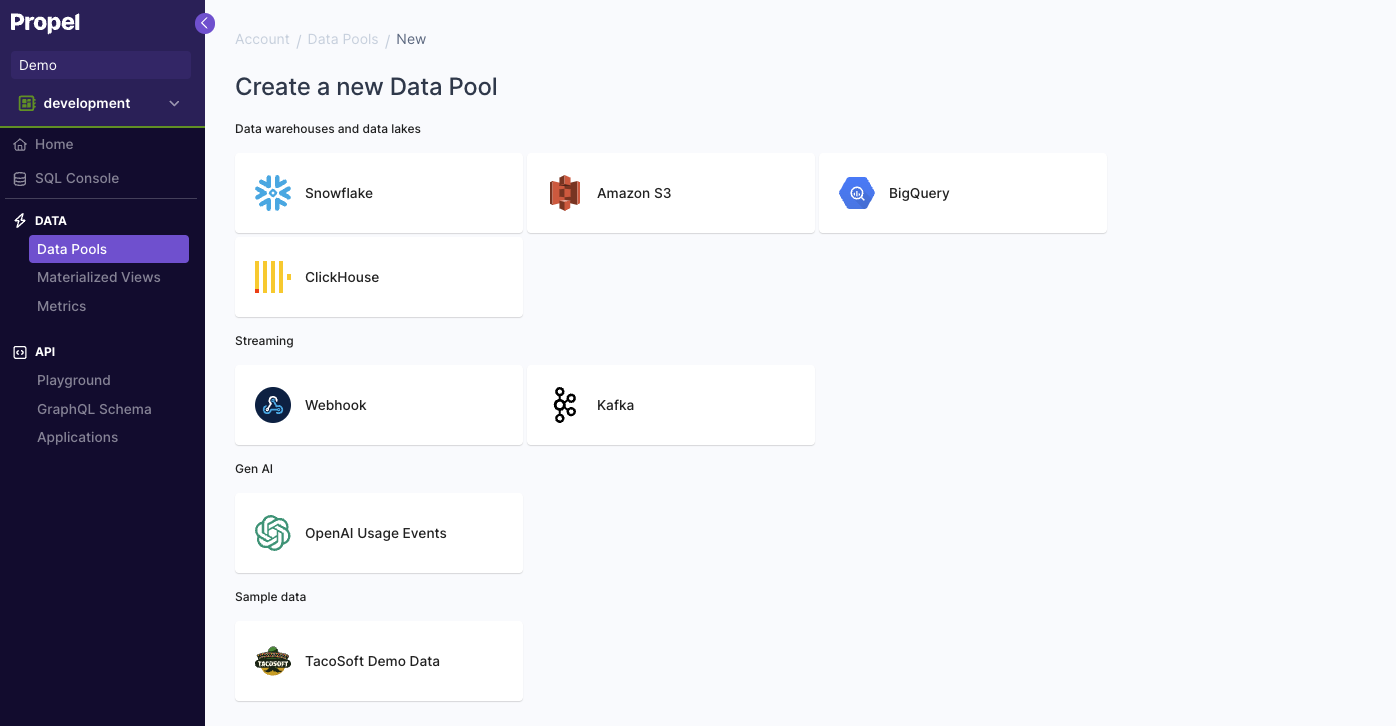

To create a Data Pool that can handle real-time deletes in Console, first go to the “Data Pools” section, then click “Create Data Pool”. Select the Data Pool type you need to create.

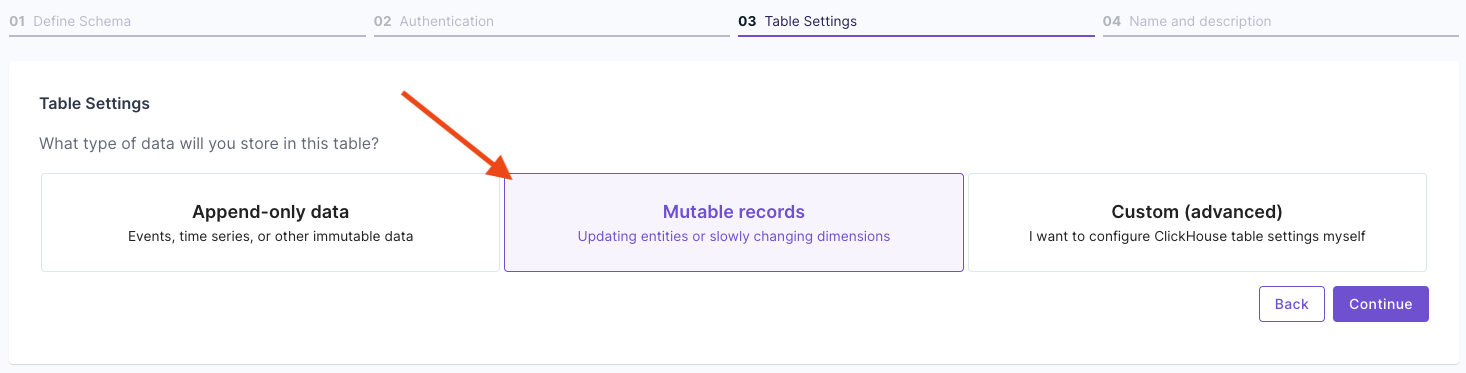

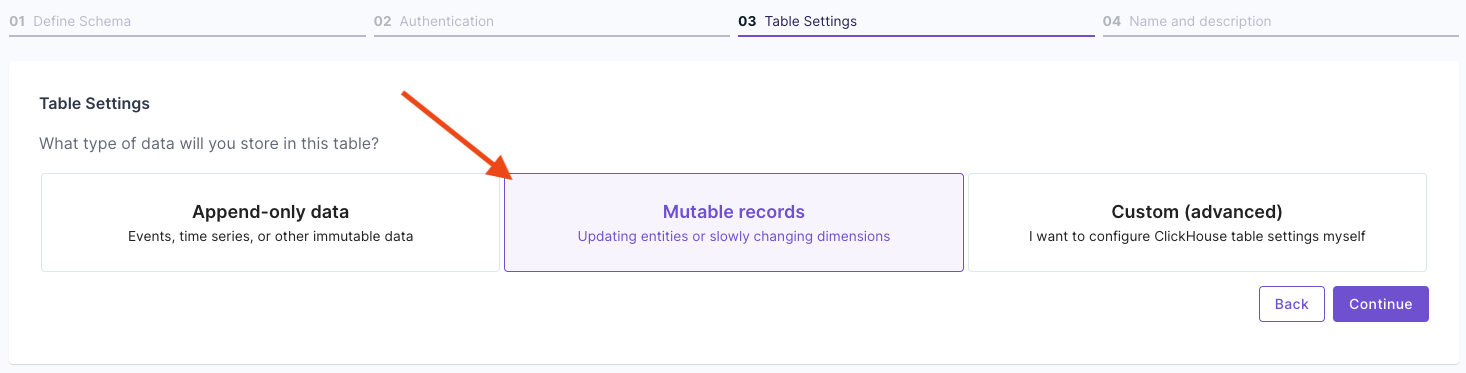

Select the Data Pool type you need to create. In the “Table Settings” step, select “Mutable Data”.

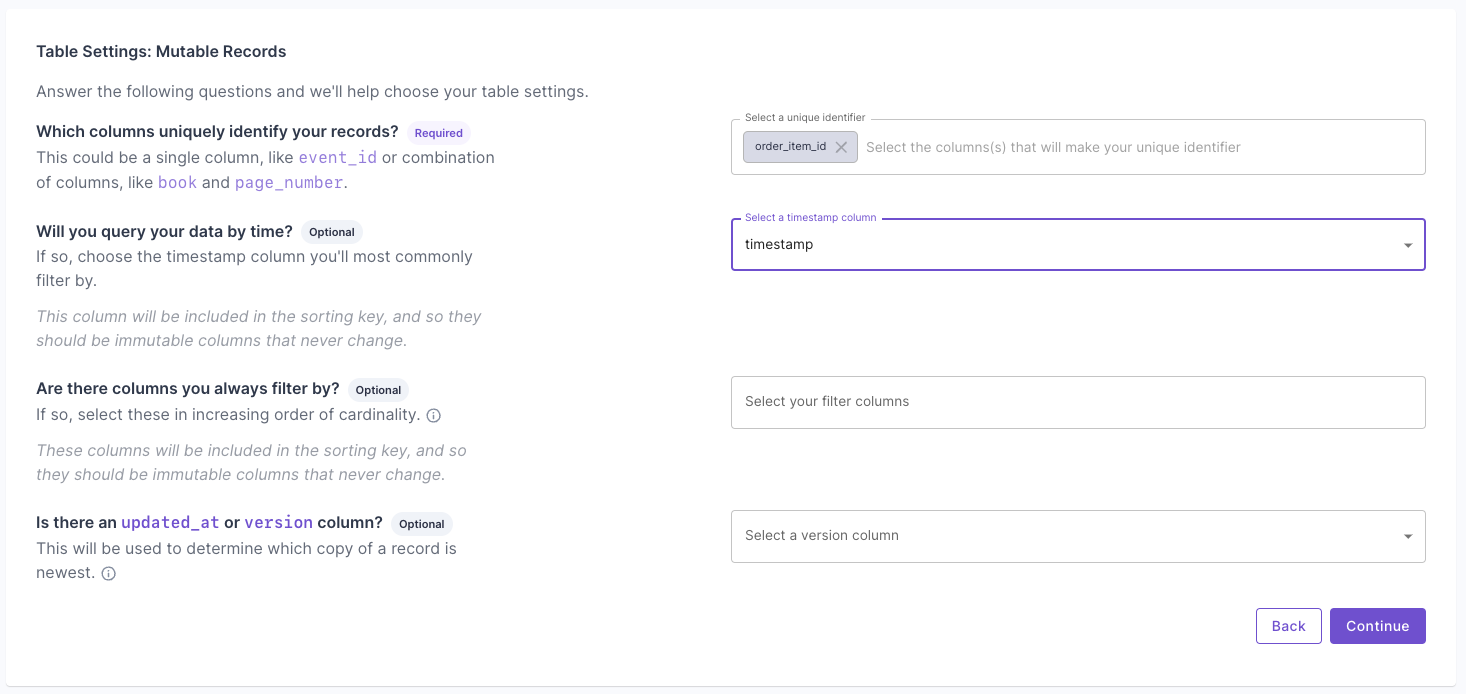

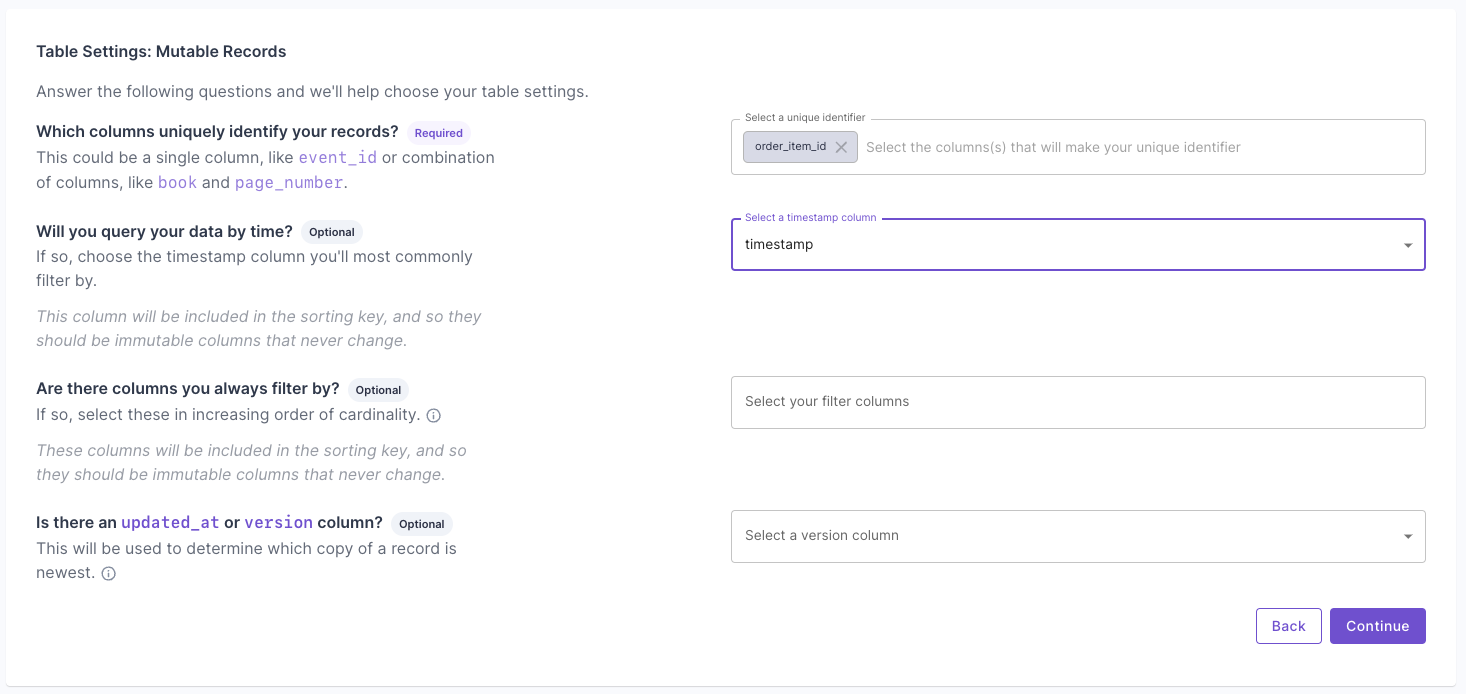

In the “Table Settings” step, select “Mutable Data”. Answer the questions on how to uniquely identify records and your query patterns. For more information, read the “Mutable records” section of the How to select a table engine and sorting key guide.

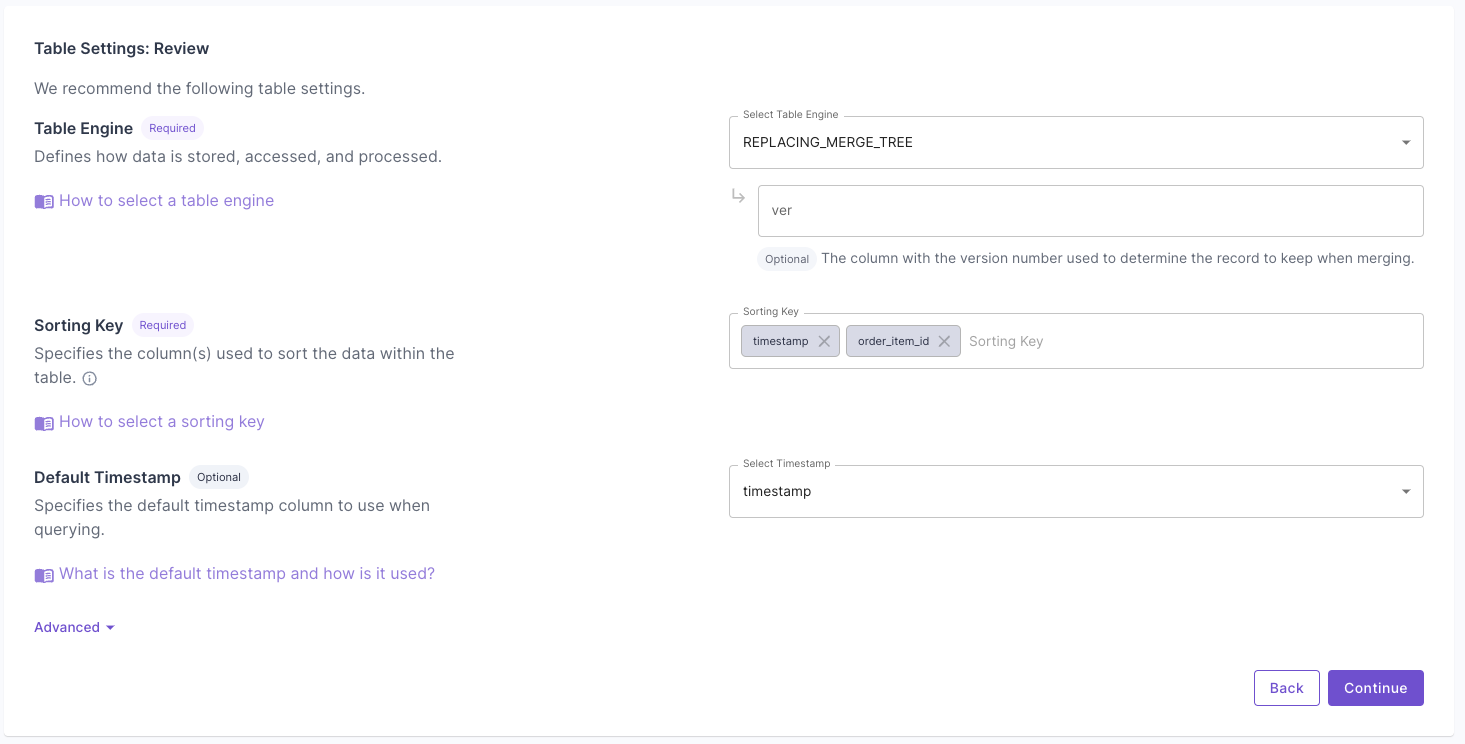

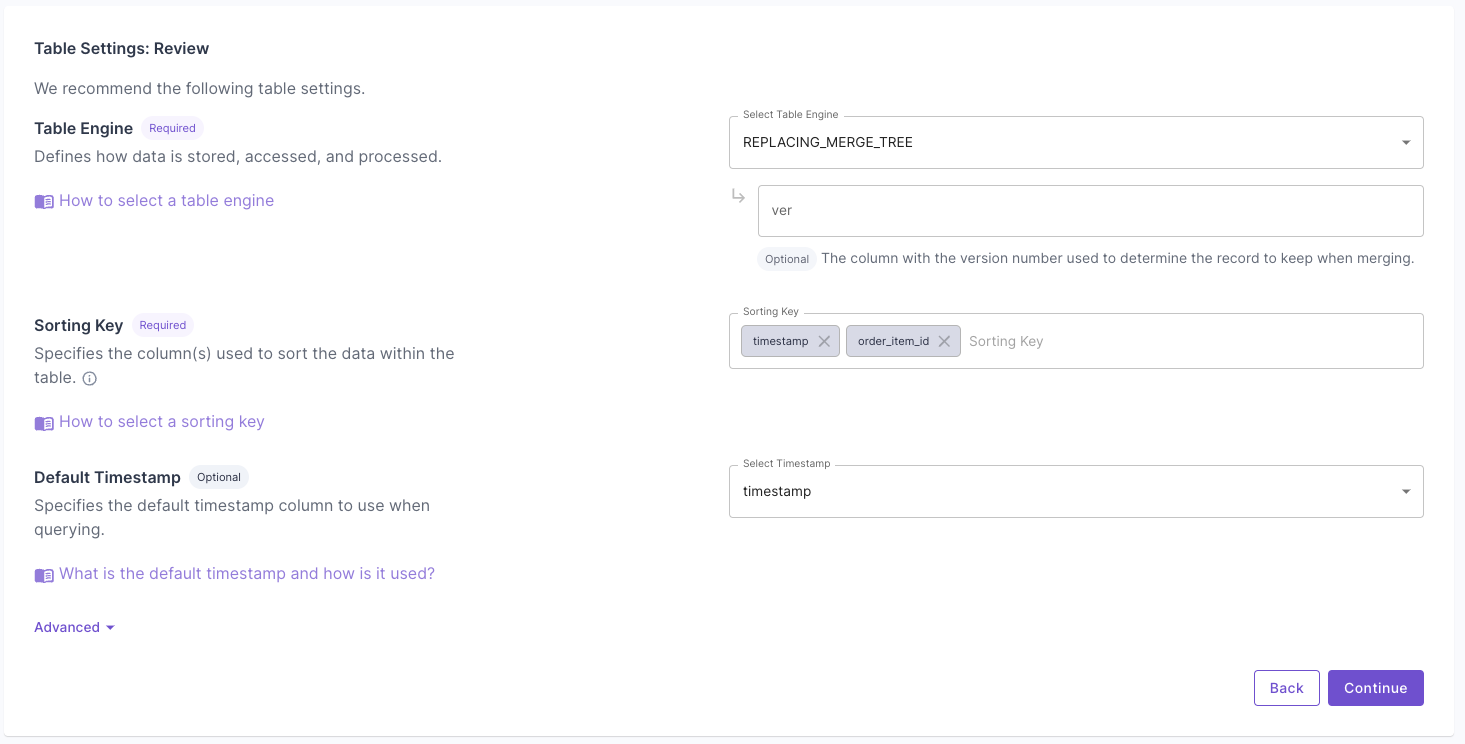

Answer the questions on how to uniquely identify records and your query patterns. For more information, read the “Mutable records” section of the How to select a table engine and sorting key guide. Next, you will get the suggested table settings. Note that the table engine is ReplacingMergeTree, and the sorting key determines the uniqueness of the row.

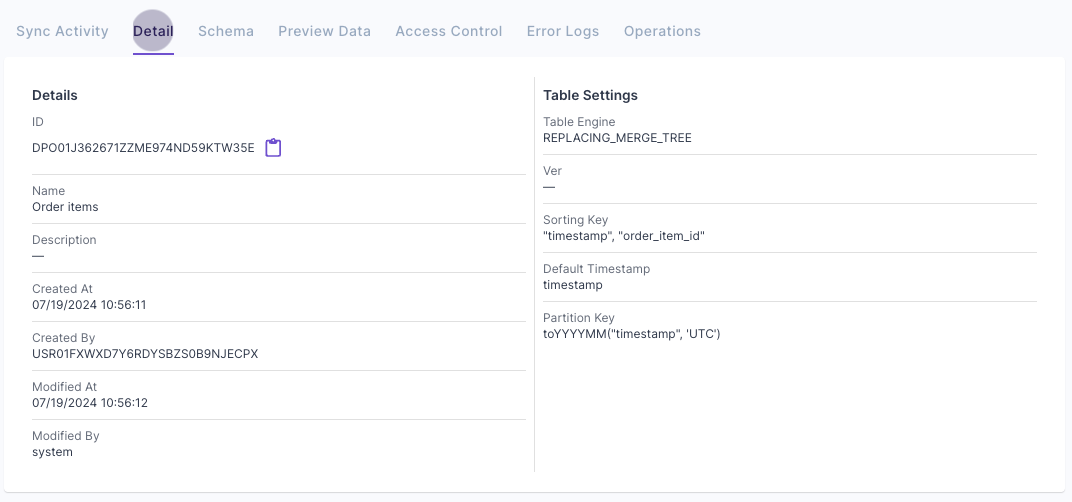

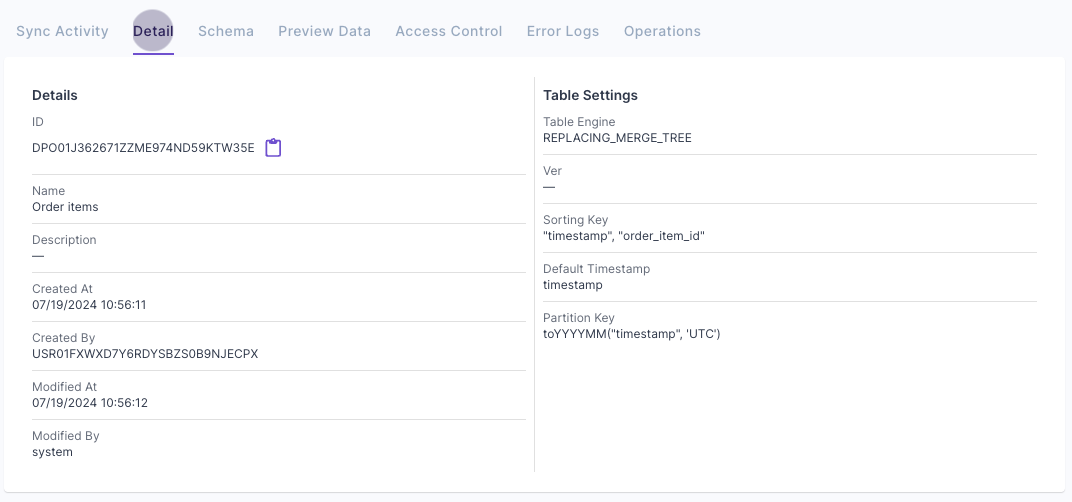

Next, you will get the suggested table settings. Note that the table engine is ReplacingMergeTree, and the sorting key determines the uniqueness of the row. Click “Next” to finish setting up the Data Pool. Once it is created, you can go to the “Details” tab and verify the settings.

Click “Next” to finish setting up the Data Pool. Once it is created, you can go to the “Details” tab and verify the settings.

Propel's Data Pools Console page

Create new Data Pool

ClickHouse table settings configuration

ClickHouse table settings configuration questions

Suggested ClickHouse table settings

Data Pool details

Batch deletes

Batch deletes are useful for one-off or automated jobs where you need to delete many rows in a table.Use cases

- Deletes for GDPR compliance

- One-off deletes of incorrect data

How it works

Batch deletes in Propel are supported by table mutations in ClickHouse. When you perform a batch delete, Propel issues anALTER TABLE … DELETE statement to ClickHouse and monitors its progress. The mutation proceeds part-by-part, partition-by-partition, deleting rows in the table.

Propel provides a simple way to data data asynchronously using the Console or the createDeletionJob API.

Create a batch delete job

In this section, we guide you through the process of creating a batch delete job via the Console and API.- Console

- API

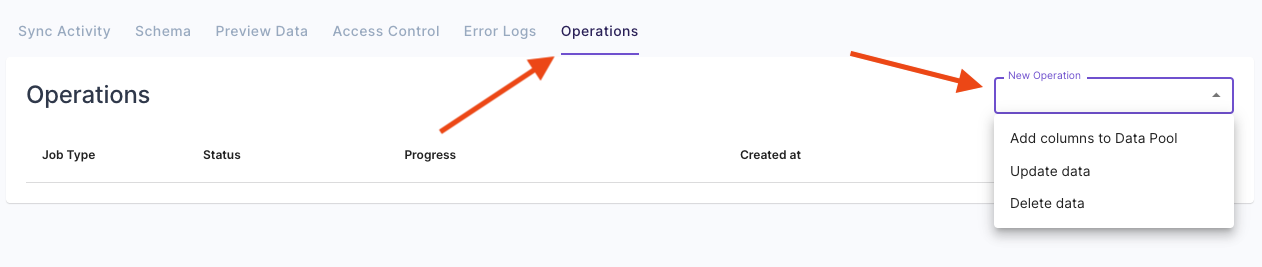

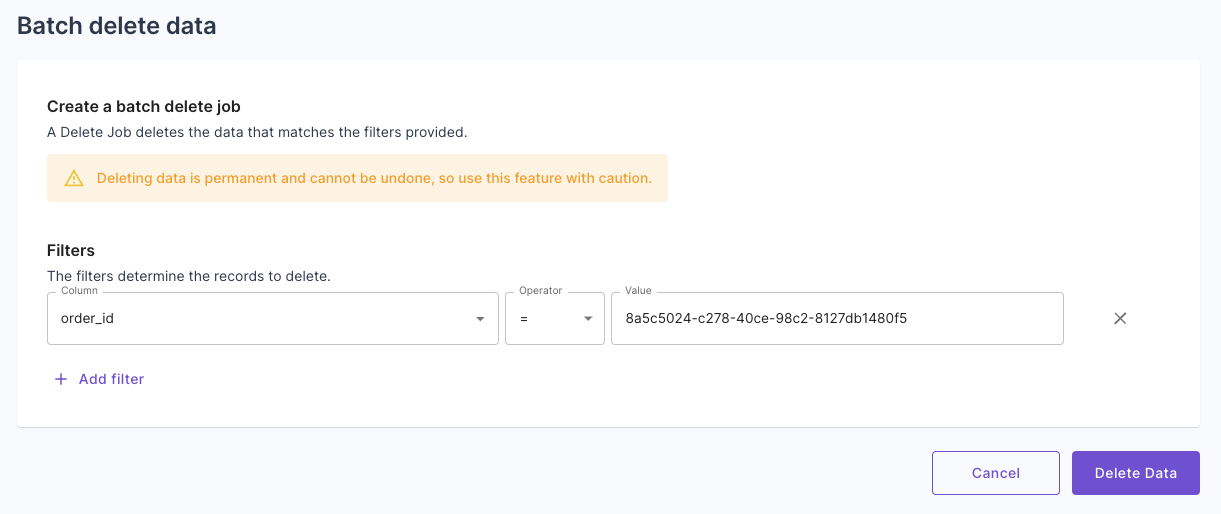

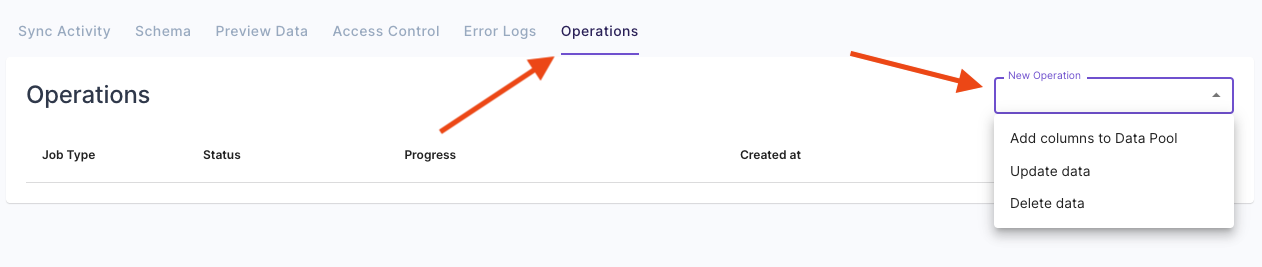

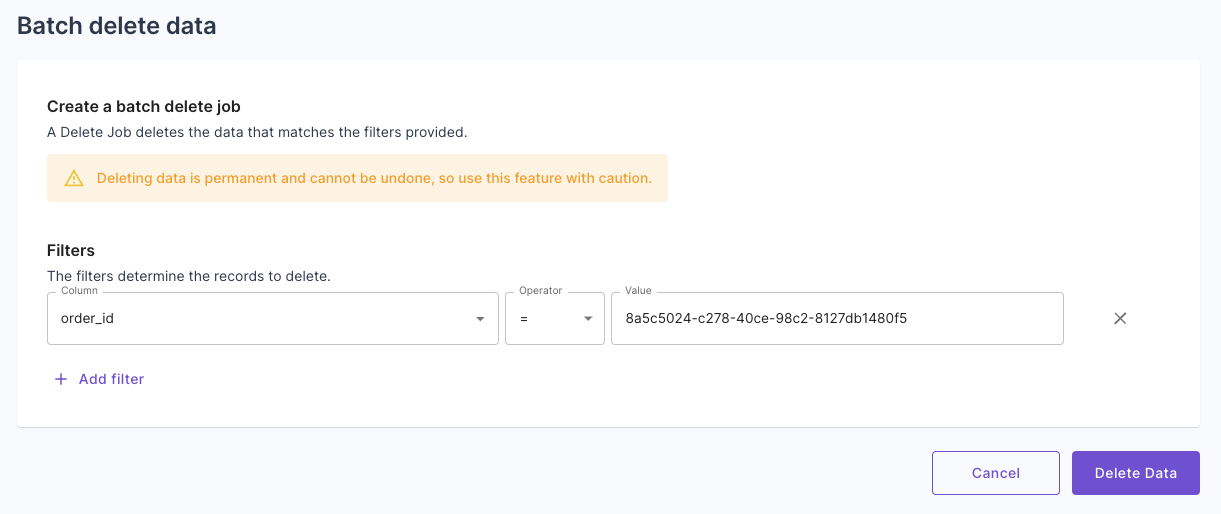

You can initiate a delete job in the Console by navigating to the Data Pool from which you need to delete data, clicking on the “Operations” tab, and then clicking “Delete data”. Here, you can specify the filters of the data to delete.

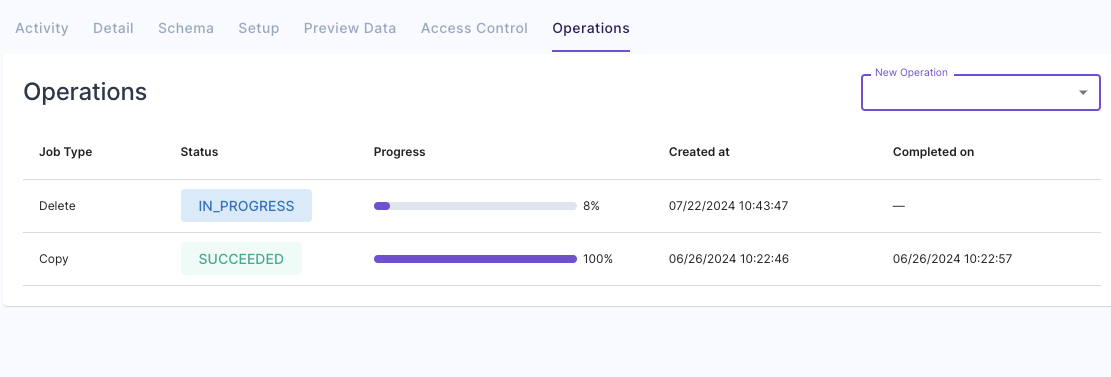

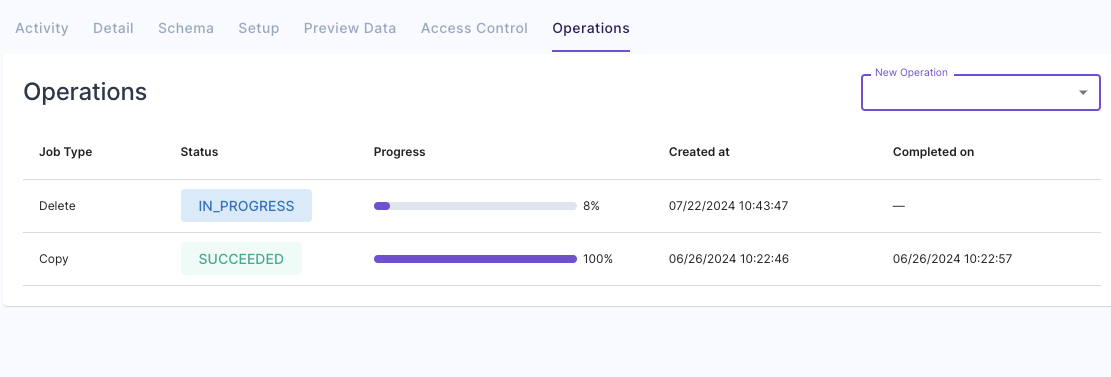

Here, you can specify the filters of the data to delete. Lastly, you can see the progress of the delete job and when it is completed.

Lastly, you can see the progress of the delete job and when it is completed.

Deleting data

Deleting data in Console

Monitoring a delete job in Console

Remember, deleting data is permanent and cannot be undone, so use this feature cautiously.