Get started with Amazon Data Firehose

Step-by-step instructions to ingest an Amazon Data Firehose stream to Propel.

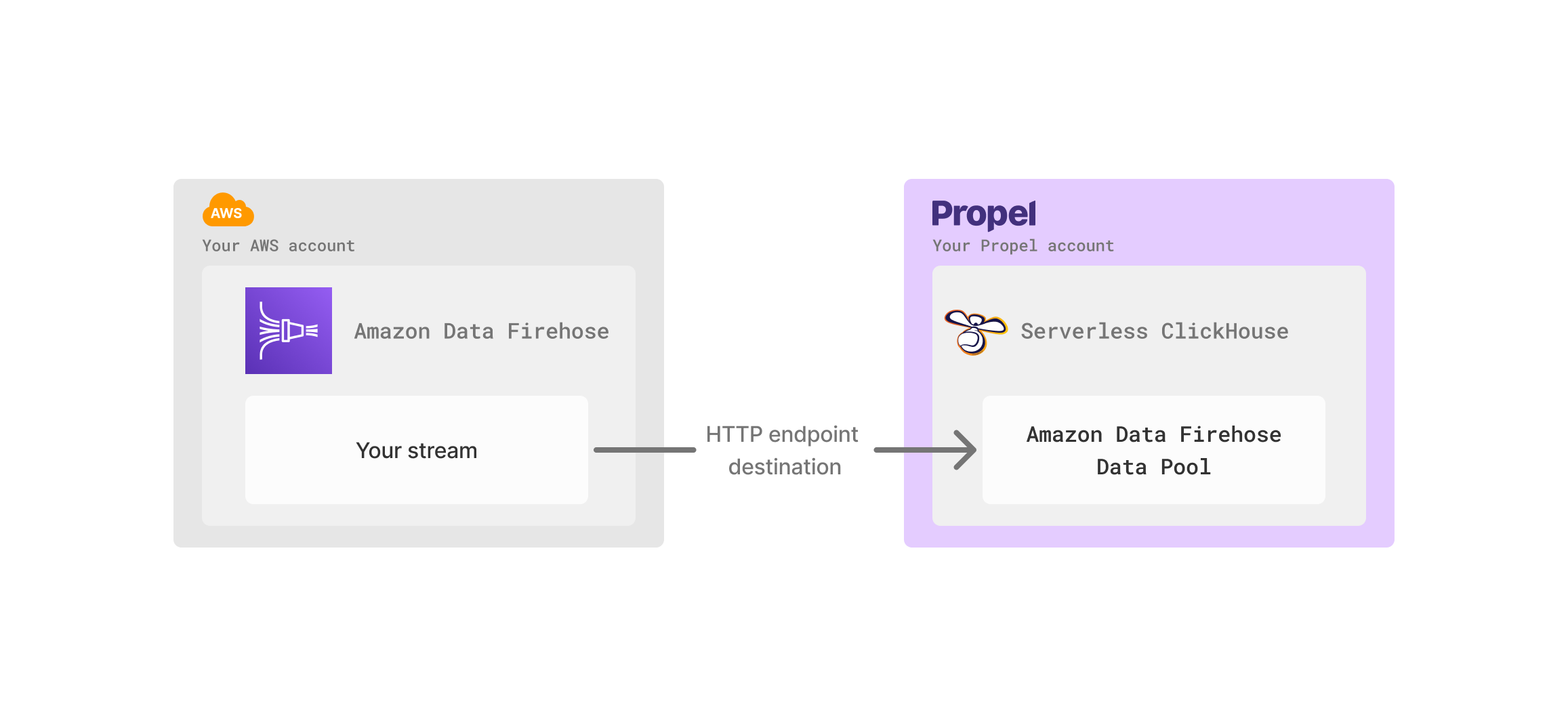

Architecture

Amazon Data Firehose Data Pools in Propel provide you with an HTTP endpoint and an X-Amz-Firehose-Access-Key to configure as a destination in Amazon Data Firehose.
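As a minimal sketch, the Python below builds the `HttpEndpointDestinationConfiguration` that boto3's Firehose client accepts in `create_delivery_stream`. The endpoint URL and access key are the values shown on your Data Pool. The IAM role ARN, the S3 backup bucket ARN, the display name, and the stream name are placeholders you would replace with your own. Amazon Data Firehose sends the configured access key in the X-Amz-Firehose-Access-Key header of each request.

```python
from typing import Any


def build_http_endpoint_destination(
    endpoint_url: str,
    access_key: str,
    role_arn: str,
    backup_bucket_arn: str,
) -> dict[str, Any]:
    """Return an HttpEndpointDestinationConfiguration for create_delivery_stream."""
    return {
        "EndpointConfiguration": {
            "Url": endpoint_url,      # HTTP endpoint shown on the Propel Data Pool
            "Name": "Propel",         # display name only (placeholder)
            "AccessKey": access_key,  # sent by Firehose as X-Amz-Firehose-Access-Key
        },
        "RoleARN": role_arn,
        # Firehose requires an S3 configuration for HTTP endpoint destinations;
        # with FailedDataOnly it stores only records that could not be delivered.
        "S3BackupMode": "FailedDataOnly",
        "S3Configuration": {"RoleARN": role_arn, "BucketARN": backup_bucket_arn},
    }


def create_stream(name: str, destination: dict[str, Any]) -> None:
    """Create a Direct PUT stream that delivers to the HTTP endpoint."""
    import boto3  # imported lazily so the builder works without boto3 installed

    firehose = boto3.client("firehose")
    firehose.create_delivery_stream(
        DeliveryStreamName=name,
        DeliveryStreamType="DirectPut",
        HttpEndpointDestinationConfiguration=destination,
    )
```

Keeping the configuration in a plain function makes it easy to review or reuse in other tooling before anything is created in AWS.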

Features

Amazon Data Firehose ingestion supports the following features:

| Feature name | Supported | Notes |
|---|---|---|
| Event collection | ✅ | Collects individual events and batches of events in JSON format. |
| Real-time updates | ✅ | See the Real-time updates section. |
| Real-time deletes | ✅ | See the Real-time deletes section. |
| Batch Delete API | ✅ | See Batch Delete API. |
| Batch Update API | ✅ | See Batch Update API. |
| Bulk insert | ✅ | Up to 500 events per HTTP request. |
| API configurable | ✅ | See API docs. |
| Terraform configurable | ✅ | See Terraform docs. |

How does the Amazon Data Firehose Data Pool work?

The Amazon Data Firehose Data Pool receives events from an Amazon Data Firehose stream through an HTTP endpoint destination. Propel handles the special encoding, data format, and basic authentication required for receiving Amazon Data Firehose events. By default, the Amazon Data Firehose Data Pool includes two columns:

| Column | Type | Description |
|---|---|---|
| _propel_received_at | TIMESTAMP | The timestamp, in UTC, when the event was collected. |
| _propel_payload | JSON | The JSON payload of the event. |
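Propel performs this decoding for you. The sketch below only illustrates how an Amazon Data Firehose HTTP endpoint request body, a JSON object whose `records` array holds base64-encoded `data` fields, could map onto the two default columns. It assumes each record contains exactly one JSON event and is not Propel's implementation.

```python
import base64
import json
from datetime import datetime, timezone
from typing import Any


def decode_firehose_request(body: bytes) -> list[dict[str, Any]]:
    """Turn a Firehose HTTP endpoint request body into rows with the default columns."""
    request = json.loads(body)
    received_at = datetime.now(timezone.utc)  # one collection time for the whole request
    rows = []
    for record in request["records"]:
        # Each record's "data" field is the base64-encoded bytes the producer sent.
        payload = json.loads(base64.b64decode(record["data"]))
        rows.append({"_propel_received_at": received_at, "_propel_payload": payload})
    return rows
```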

When creating an Amazon Data Firehose Data Pool, you can flatten top-level or nested JSON keys into specific columns.
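To illustrate what flattening means, the sketch below pulls a top-level key and a nested key out of a payload into named columns. The column names and key paths in `COLUMNS` are hypothetical; in Propel you choose them in the Data Pool setup, not in code.

```python
from typing import Any

# Hypothetical mapping from column names to JSON key paths in the payload.
COLUMNS = {
    "order_id": ("order_id",),
    "customer_id": ("customer", "id"),
}


def extract_columns(payload: dict[str, Any], columns: dict[str, tuple[str, ...]]) -> dict[str, Any]:
    """Pull top-level or nested keys out of a payload; missing keys become None."""
    row: dict[str, Any] = {}
    for column, path in columns.items():
        value: Any = payload
        for key in path:
            # Stop as soon as the path leaves the object structure.
            if not isinstance(value, dict) or key not in value:
                value = None
                break
            value = value[key]
        row[column] = value
    return row
```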

Errors

- HTTP 429 Too Many Requests - Returned when your application is being rate limited by Propel. Retry with exponential backoff.

- HTTP 413 Content Too Large - Returned if there are more than 500 events in a single request or the payload exceeds 1,048,320 bytes. Fix the request; retrying won’t help.

- HTTP 400 Bad Request - Returned when the schema is incorrect and Propel is rejecting the request. Fix the request; retrying won’t help.

- HTTP 500 Internal Server Error - Returned if something went wrong in Propel. Retry with exponential backoff.
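For a client you control, the retry rules above can be sketched as follows: retry 429 and 500 with exponential backoff and jitter, and return every other status immediately. `send` is a hypothetical callable that performs the POST and returns the status code. When Amazon Data Firehose is the sender, it applies its own retry policy, configured through `RetryOptions` on the HTTP endpoint destination.

```python
import random
import time
from typing import Callable

RETRYABLE = {429, 500}  # rate limited, or something went wrong in Propel


def should_retry(status: int) -> bool:
    # 400 and 413 are not retryable: fix the request instead.
    return status in RETRYABLE


def send_with_backoff(send: Callable[[], int], max_attempts: int = 5, base_delay: float = 0.5) -> int:
    """Call send() until it stops returning a retryable status or attempts run out."""
    status = send()
    attempt = 1
    while should_retry(status) and attempt < max_attempts:
        # Full jitter: wait a random time up to base_delay * 2^(attempt - 1) seconds.
        time.sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))
        status = send()
        attempt += 1
    return status
```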

Use the disable_partial_success=true query parameter to make sure that if any event in a batch fails validation, the entire request fails.

For example:
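A minimal sketch of adding the parameter to your Data Pool's HTTP endpoint, assuming the parameter is simply appended to the URL's query string. The URL used below is a placeholder, not a real Propel endpoint.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_disable_partial_success(endpoint_url: str) -> str:
    """Return endpoint_url with disable_partial_success=true in its query string."""
    parts = urlsplit(endpoint_url)
    query = dict(parse_qsl(parts.query))  # keep any existing query parameters
    query["disable_partial_success"] = "true"
    return urlunsplit(parts._replace(query=urlencode(query)))
```

For example, `with_disable_partial_success("https://example.com/v1/pool")` returns `https://example.com/v1/pool?disable_partial_success=true`.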

Schema changes

The Amazon Data Firehose Data Pool is designed to handle semi-structured, schema-less JSON data. This flexibility allows you to add new properties to your payload as needed. The entire payload is always stored in the _propel_payload column.

However, Propel enforces the schema for required fields. If you stop providing data for a required field that was previously unpacked into its own column, Propel will return an error.
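One way to catch this before Propel rejects a request is to check events for required keys on the producer side. In the sketch below, the key names in `REQUIRED_KEYS` are hypothetical, and treating a JSON null as missing is an assumption, not a documented Propel rule.

```python
from typing import Any

# Hypothetical top-level keys that were unpacked into required columns.
REQUIRED_KEYS = ["order_id", "created_at"]


def missing_required(event: dict[str, Any], required: list[str]) -> list[str]:
    """Return required keys that are absent or null (null treated as missing by assumption)."""
    return [key for key in required if event.get(key) is None]
```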

Adding Columns

1. Go to the Schema tab

Go to the Data Pool and click the “Schema” tab. Click the “Add Column” button to define the new column.

2. Add column

Specify the JSON property to extract, the column name, and the column type, then click “Add column”.

3. Track progress

After you click “Add column”, an asynchronous operation begins adding the column to the Data Pool. You can track its progress in the “Operations” tab.

Note that adding a column does not backfill existing rows. To backfill, run a batch update operation.

Column deletions, modifications, and data type changes are not supported as they are breaking changes to the schema. If you need to change the schema, you can create a new Data Pool.

Data Types

The table below shows the default mappings from JSON types to Propel types. You can change these mappings when creating an Amazon Data Firehose Data Pool.

| JSON Type | Propel Type |
|---|---|
| String | STRING |
| Number | DOUBLE |
| Object | JSON |
| Array | JSON |
| Boolean | BOOLEAN |
| Null | JSON |
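The default mapping above can be expressed as a small function over values decoded with Python's `json` module. This is only an illustration of the table, useful for checking a sample event before creating a Data Pool; it is not a Propel API.

```python
from typing import Any


def default_propel_type(value: Any) -> str:
    """Map a decoded JSON value to its default Propel column type."""
    if isinstance(value, bool):  # check bool first: in Python, bool is a subclass of int
        return "BOOLEAN"
    if isinstance(value, (int, float)):
        return "DOUBLE"
    if isinstance(value, str):
        return "STRING"
    # Objects (dict), arrays (list), and null (None) all default to JSON.
    return "JSON"
```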

Limits

- Each POST request can include up to 500 events (as a JSON array).

- The payload size can be up to 1 MiB.
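A minimal batching sketch for a client that sends JSON arrays directly: it groups events so each array holds at most 500 events and stays within a byte limit. It uses 1,048,320 bytes, the 413 threshold from the Errors section, as the conservative limit, and assumes batches are serialized compactly with `separators=(",", ":")` so the size estimate matches the bytes sent. A single event larger than the limit still ends up alone in its own batch and would be rejected.

```python
import json
from typing import Any, Iterator

MAX_EVENTS = 500
MAX_BYTES = 1_048_320  # 413 threshold from the Errors section, just under 1 MiB
SEPARATORS = (",", ":")  # compact JSON, so sizes add up exactly


def batches(events: list[Any], max_events: int = MAX_EVENTS, max_bytes: int = MAX_BYTES) -> Iterator[list[Any]]:
    """Yield lists of events whose compact JSON array fits both limits."""
    batch: list[Any] = []
    size = 2  # the enclosing "[" and "]"
    for event in events:
        event_size = len(json.dumps(event, separators=SEPARATORS).encode("utf-8"))
        separator = 1 if batch else 0  # the "," between array elements
        if batch and (len(batch) == max_events or size + separator + event_size > max_bytes):
            yield batch
            batch, size, separator = [], 2, 0
        batch.append(event)
        size += separator + event_size
    if batch:
        yield batch


def encode_batch(batch: list[Any]) -> bytes:
    """Serialize a batch the same way its size was estimated."""
    return json.dumps(batch, separators=SEPARATORS).encode("utf-8")
```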

Best Practices

- Maximize the number of events per request, up to 500 events or 1 MiB, to enhance ingestion speed.

- Implement exponential backoff for retries on 429 (Too Many Requests) and 500 (Internal Server Error) responses to prevent data loss.

- Set up alerts or notifications for 413 (Content Too Large) errors, as these indicate exceeded event count or payload size, and retries will not resolve the issue.

- Ensure the Amazon Data Firehose Data Pool is created with all necessary fields. Utilize a sample event and the “Extract top-level fields” feature during setup.

- Assign the correct event timestamp as the default. If your event lacks a timestamp, use the _propel_received_at column.

- Configure all fields, except the default timestamp, as optional to minimize 400 (Bad Request) errors. This configuration allows Propel to process requests even with missing data, which can be backfilled later.

- Set up alerts or notifications for 400 errors, as these signify schema issues that require attention, and retries will not resolve them.

- Confirm that all data types are correct. Objects, arrays, and dictionaries should be designated as JSON in Propel.

Transforming data

Once your data is in an Amazon Data Firehose Data Pool, you can use Materialized Views to:

- Flatten nested JSON into tabular form

- Flatten JSON arrays into individual rows

- Combine data from multiple source Data Pools through JOINs

- Calculate new derived columns from existing data

- Perform incremental aggregations

- Sort rows with a different sorting key

- Filter out unnecessary data based on conditions

- De-duplicate rows
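Materialized Views themselves are defined in Propel, not in Python. The sketch below only illustrates the row-level effect of one transformation from the list, flattening a JSON array into individual rows: one input row with N array elements becomes N output rows. The field names are hypothetical.

```python
from typing import Any


def explode(row: dict[str, Any], array_key: str) -> list[dict[str, Any]]:
    """Emit one output row per element of row[array_key], copying the other fields."""
    base = {k: v for k, v in row.items() if k != array_key}
    # A missing, null, or empty array produces no output rows.
    return [{**base, array_key: item} for item in row.get(array_key) or []]
```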

Frequently Asked Questions

How long does it take for an event to be available via SQL or the API?

It depends on the buffer settings you configure in Amazon Data Firehose and on the internal buffers Propel uses to optimize performance. Depending on the buffer size, it can range from 10 seconds to 2 minutes.
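Amazon Data Firehose delivers a buffer when either its size threshold or its interval threshold is reached, whichever comes first. The sketch below estimates only that Firehose part of the delay from an assumed steady ingest rate; Propel's internal buffering adds to it and is not modeled. It treats 1 MB as 1,048,576 bytes, which is an assumption, and the rates and buffer values are placeholders.

```python
def estimated_flush_seconds(bytes_per_second: float, buffer_size_mb: float, buffer_interval_s: float) -> float:
    """Seconds until Firehose flushes: whichever of the size or interval threshold comes first."""
    if bytes_per_second <= 0:
        # With no incoming data the size threshold is never reached.
        return buffer_interval_s
    seconds_to_fill = buffer_size_mb * 1024 * 1024 / bytes_per_second
    return min(seconds_to_fill, buffer_interval_s)
```

At low volume the interval dominates, so a shorter interval lowers latency; at high volume the size threshold is reached first.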